The Educational Program Assessment (EPA) team provides expertise in the design, implementation, analysis, and reporting of assessments that explore the impact of teaching and learning programs on campus.

What is Educational Program Assessment?

Educational program assessment is the process of systematically collecting, analyzing, and using data to understand and improve the activities and outcomes of a program. In educational contexts, program assessments usually extend beyond a single course to look at overarching questions related to educational initiatives.

They can be used to:

- Identify methods of improving the approach or quality of educational activities

- Provide feedback to students, faculty, and administrators about their efforts

- Ensure that programs are meeting their aims and functioning well

The Collaborative Assessment Model (CAM)

The Collaborative Assessment Model is an interdisciplinary, evidence-driven approach. Under this model, a successful assessment involves establishing shared goals, gathering data, analyzing the results, and considering what actionable measures can be taken.

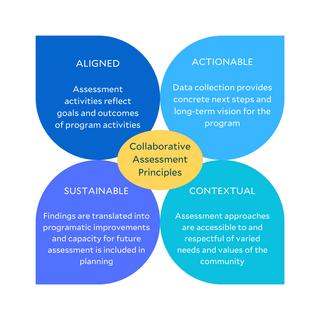

Our work is guided by this model, which emphasizes assessments that are:

- Aligned

- Actionable

- Contextual

- Sustainable

(Bathgate & Claydon, 2021)

Strong communication with program leaders, which allows reflection and iteration based on the data, is a hallmark of this model. The Collaborative Assessment Model defines principles of strong data collection and relationship building within higher education settings.

ALIGNED: This means that the assessment goals are well-articulated and all data collected tie back to these goals in specific ways without missing any key outcomes.

ACTIONABLE: This means that the data collected help explain why a program is functioning well or where it may need improvement.

SUSTAINABLE: This means that the process of rerunning assessments, where needed, is planned in advance so that program leaders can carry on data collection.

CONTEXTUAL: This means considering the tone of a program, its relationship with participants, and the perspective of learners.

What does Educational Program Assessment look like at the Poorvu Center?

Our assessment work is built on strong collaborations and educational research design that emphasizes mixed methodologies. We use our team’s backgrounds in learning science, cognitive psychology, and neuroscience to inform this work.

We balance the rigor of established assessment approaches with the realistic expectation that data collection needs to be responsive to a program’s dynamic needs. We prioritize embedded and nimble methods that emphasize sustainability and avoid heavy lifts from participants, instructors, and program leaders.

Most projects begin with a consultation, where you can share ideas and meet a member of our team.

We’re here to help!

Reach out to the Poorvu Center team if you have any questions or would like to learn more about our programs.