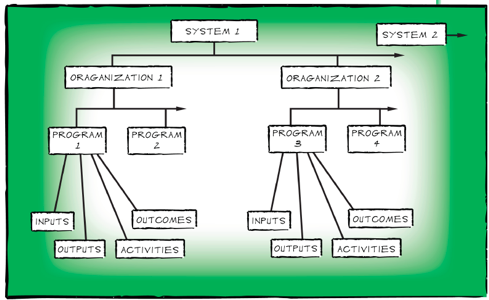

The Systems Evaluation Protocol (SEP) was developed in 2012 as a standardized evaluation procedure, primarily in relation to STEM education programs. It draws on literature from evaluation theory, systems theory, and evolutionary epistemology. SEP operates on the premise that sets of activities or programs are part of organizational structures, which are in turn part of larger networks and systems.[1]

For example, a course’s curriculum is part of a specific department, which is part of an institution, which is part of the higher education system. SEP proposes that each program can then be broken into segments: inputs, activities, outputs, and outcomes.

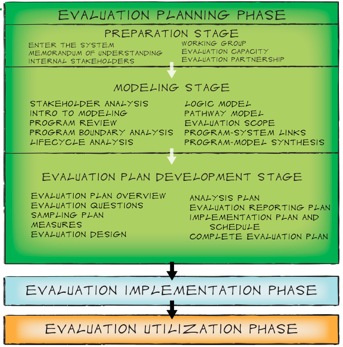

Process for SEP - SEP consists of three phases: planning, implementation, and utilization.

Phase 1: Planning

An evaluation specialist tends to be more heavily involved in the planning phase, which can further be broken down into three distinct stages.

Preparation -

The preparation stage provides space for key stakeholders to develop guidelines for the working relationship by writing a memorandum of understanding, a guide to the roles and expectations of the group members. The memorandum can also articulate the program goals and the time commitments expected of the working group.

Modeling -

The modeling stage begins the process of a program review by identifying the scope of the evaluation, providing a space for reflection and synthesis across activities, and creating logic and pathway models.

- A logic model examines the program’s context and background; the inputs, such as resources and budget; the activities, such as the program components; the outputs, meaning the products or knowledge participants gain during the program; and the short-, medium-, and long-term goals or outcomes of having participated in the program.

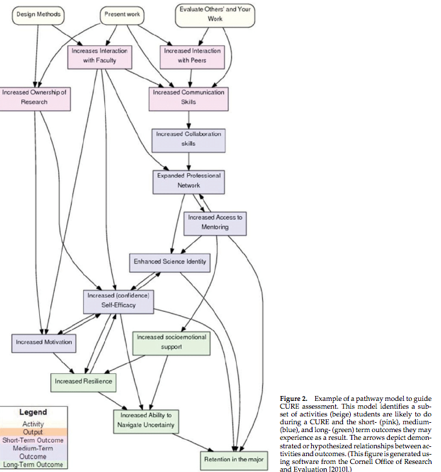

- The pathway model takes a further step to visually depict the logical connections between activities and goals. When completed, the pathway model provides a concise map of how a program’s parts are thought to work together. By examining which parts are connected, the evaluator can also see which parts do not connect, and identify what assumptions are being made about the parts that are connected. These insights provide a deeper look at how the program is operating and create space for revision.
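
The connection check described for the pathway model can be sketched in a few lines of Python. This is only an illustration, not part of the SEP guide: it assumes a pathway model can be treated as a list of arrows between named activities and outcomes, and the program elements and function names below are hypothetical.

```python
# Illustrative sketch only: the SEP guide does not prescribe code.
# A pathway model is treated here as arrows (source, target) between
# named program elements. All names below are hypothetical examples.

def activities_without_links_out(activities, links):
    """Return activities that have no arrow leading to any outcome."""
    sources = {source for source, _ in links}
    return sorted(set(activities) - sources)


def outcomes_without_links_in(outcomes, links):
    """Return outcomes that no activity or earlier outcome points to."""
    targets = {target for _, target in links}
    return sorted(set(outcomes) - targets)


# Hypothetical program elements, invented for this example.
activities = ["weekly lab sessions", "peer mentoring", "guest lecture series"]
outcomes = [
    "improved lab skills",        # short-term
    "stronger science identity",  # medium-term
    "persistence in STEM major",  # long-term
]

# Each arrow records an assumed causal link in the pathway model.
links = [
    ("weekly lab sessions", "improved lab skills"),
    ("peer mentoring", "stronger science identity"),
    ("improved lab skills", "stronger science identity"),
]

print(activities_without_links_out(activities, links))
print(outcomes_without_links_in(outcomes, links))
```

In this made-up model, the guest lecture series leads to no stated outcome and the long-term outcome is not supported by any arrow. Gaps of this kind are what the working group would discuss and revise.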

Evaluation Plan development -

In the evaluation plan development stage, a purpose statement is written along with the evaluation questions, measures and measurement strategies are chosen, an appropriate evaluation design is selected, and an analysis and implementation plan is developed. Once these steps are complete, the SEP guide has provided the framework for a final evaluation plan that the program's working group can implement.

Phase 2: Implementation - Conduct the evaluation

- Gather the data according to the plan you developed.

- Analyze the data - Depending on the type of data you collected, select appropriate statistical analyses that allow you to understand what the data show.

- Report the results to program stakeholders - Identify the audience and write an evaluation report. Include a description of the program as well as the results.

Phase 3: Utilization - Revise the program and/or evaluation plan for continuous improvement

Evaluating a program is not a one-time process. When done well, it establishes an ongoing cycle of measurement and revision within the program.

[1] Cornell Office for Research on Evaluation and the Montclair State Developmental Systems Science and Evaluation Research Lab. (2012). The Guide to the Systems Evaluation Protocol. Ithaca, NY.

[2] Corwin, L.A., Laursen, S.L., Branchaw, J.L., & Eagan, K. (2014). Assessment of course-based undergraduate research experiences: A meeting report. CBE-Life Sciences Education, 13, 29-40.
